The Ordinariness of AIDS

Can a disease that tells us so much about ourselves ever be anything but extraordinary?

The AIDS epidemic is now 25 years old. Early in the epidemic Susan Sontag forecast a day when AIDS—“the disease most fraught with meaning,” she called it—would become an ordinary illness. But can AIDS be ordinary?

Something like ordinariness has happened to cancer, and perhaps that is what Sontag had in mind. Cancer has changed: if cancer’s suffering feels like the “night-side of life,” as Sontag put it in Illness as Metaphor, the darkness is no longer secret. The cancer metaphor has not yet gone out of use, but when it is used, it no longer seems to signify what she termed “our reckless improvident responses to our real ‘problems of growth.’” Cancer is still too prevalent a way of American death and too troubled a way of life for many of us to consider it a commonplace illness. But cancer patients are no longer lied to about their diagnoses, as was once the standard practice. People no longer speak of malignancy in whispers. Cancer no longer seems to define the character of the sufferer and, increasingly, we are recognizing that a tumor is not anybody’s fault.

In the early years of AIDS, it was easy to think that the disease might follow a similar trajectory. Sontag urged that, once the malady came to be understood medically and had become treatable, we should dismiss the dangerous metaphors then stigmatizing its sufferers and open the way for a tolerant climate of care and caring. Understanding and treatment did arrive, perhaps more quickly than anyone would have predicted back in the AIDS-dark 1980s. In this country, acquiring the AIDS virus has become fairly rare. With fewer than 40,000 new occurrences per year, human immunodeficiency virus (HIV) infection occurs less often today than gonorrhea or influenza (although new-infection rates are higher—one percent per year or so—among gay men in some parts of the country, and incidence sporadically jumps in certain other subpopulations from time to time). Doctors today can treat AIDS effectively: annual mortality from the disease is less than half that of motor-vehicle deaths in the United States; even fewer would die of AIDS if more people with the virus had better health care. But while medical science now knows so much about it, AIDS persistently demands our attention. It appears in our discussions of foreign policy, aid to poor countries, the crisis in American health care, religion, morality, education, marriage, art. AIDS remains extraordinary.

If AIDS could be ordinary, perhaps it would be so in New York City. New York is probably where the human immunodeficiency virus made its American landfall, sometime in the 1970s. By the time AIDS was recognized officially (in a June 1981 publication by the federal Centers for Disease Control), the gay press in New York had already picked up the rumors of a new disease; speculation about “gay cancer” percolated among New York’s homosexuals. In New York, certainly, are the high numbers. By 1983, New York was already producing half the AIDS cases in the nation. More than 1,000 New Yorkers were diagnosed with AIDS that year and the new-case rate was doubling every 15 to 20 months. Between then and now, about one out of every seven American deaths from AIDS has been recorded in New York City. San Francisco’s roughly 18,000 AIDS deaths to date represent a larger loss relative to the city’s population, but San Francisco is a far smaller city. No other U.S. city has seen the massive toll of illness and death exacted by AIDS in New York, where 84,000 people have died.

And the statistics trace only the story’s surface; beneath those statistics is turmoil: funerals, fundraisers, a quarter-century of antidiscrimination suits, gay bashings, hospital-bed shortages, insurance-benefit crises, health-care-policy changes, religious disputes, blood throwing, racial politics, and very long lists of names read aloud at the AIDS Day ceremony in the Cathedral of St. John the Divine every December first. New Yorkers certainly have had the chance to learn from AIDS about the needs for social tolerance and a climate of caring.

But not even in New York City, it seems, is AIDS an ordinary illness. Back in February 1982, when a reporter for the New York Native asked then-commissioner of health David Sencer if he would inform the public about the new illness (AIDS hadn’t been named yet) through the mass media, the commissioner replied that the disease was not suitable for such an approach. Exactly 23 years later, on February 11, 2005, the current commissioner issued an official health alert—a communiqué usually reserved for epidemic threats—and then went on television to bring home the nature of the danger.

It’s not surprising that the ranking health official in one of America’s leading AIDS cities would have learned the lesson that mass media is indeed suitable, perhaps even indispensable, for getting word out about epidemic dangers. The surprise was that the epidemic alert was elicited by the discovery of a single case of disease, not by an epidemic at all. The greater surprise was that it sparked a round of sermonizing on the part of officials that, in its turn, enraptured the media. When one case of AIDS can claim headlines for days in a city as large and AIDS-experienced as New York, ordinariness seems far away.

The specific information about the February 2005 case was sparse: one man, infected with a version of HIV that was resistant to many of the main antiviral drugs, was found to have progressed from HIV infection to AIDS diagnosis unusually quickly. Reaction to the report of this occurrence was immediate and overwrought. While AIDS organizations and a few scientists offered cogent commentary, New York mayor Michael Bloomberg asserted that unprotected sex is “a sin in our society.” The New York Times opined that “risky behavior” should be avoided “like the plague.” A jeremiad on sex, drugs, and risk rang forth from editorial writers in New York and around the country. Much of the reaction, like Bloomberg’s comment, contained a germ of truth, a reminder that certain activities might be likely to spread dangerous viruses, HIV among them. But much of the response, also like the mayor’s remark, was cast in language not customary for civics lessons.

Was the case so unprecedented or so self-evidently threatening to the public’s health? Multi-drug HIV therapy made its debut about 10 years ago, and almost right away scientists began warning that drug-resistant viral strains would emerge sooner or later. By 2005, strains resistant to more than one antiviral drug were well documented. So the capacity of this man’s viral strain to evade the effects of a number of conventional anti-HIV drugs, while rare, was not unexpected. That the man’s HIV infection apparently progressed to AIDS so quickly was also not unheard of: AIDS develops very rapidly in a small percentage of HIV-infected people—a consequence, it seems, of a chance propitious interaction between the virus and the biochemical and molecular makeup of the person. That these two low-probability events should occur in the same individual was also perfectly predictable. Among the tens of thousands of HIV infections and AIDS cases diagnosed each year in the United States, it would be surprising if the 1-in-10,000 long shot did not come in from time to time.
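The long-shot arithmetic can be made concrete. The sketch below is illustrative only: it assumes that cases are independent of one another, reads "1-in-10,000" literally as a per-case probability, and borrows the rough figure of 40,000 new infections a year cited earlier. The function names are hypothetical.

```python
def prob_at_least_one(p: float, n: int) -> float:
    """Chance that an event with per-case probability p occurs
    at least once among n independent cases."""
    return 1.0 - (1.0 - p) ** n


def expected_count(p: float, n: int) -> float:
    """Average number of occurrences among n independent cases."""
    return p * n


# Assumed inputs, taken loosely from the essay's own round numbers.
p_long_shot = 1 / 10_000   # the "1-in-10,000 long shot"
annual_cases = 40_000      # roughly one year of new infections

print(f"expected long shots per year: {expected_count(p_long_shot, annual_cases):.1f}")
print(f"chance of at least one: {prob_at_least_one(p_long_shot, annual_cases):.3f}")
```

Under those assumptions the long shot would be expected to come in about four times a year, and the chance of a year passing without a single such case is under 2 percent.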

What was remarkable about the furor was not the response to the discovery of an anomalous AIDS case. Indeed, it is never merely in the response to an epidemic threat that society’s concerns are reflected, nor in the interpretation of illness or the narrative about an epidemic. The commissioner’s health alert, the mayor’s peroration, the media’s uptake—all are only individual sentences in a larger chapter. The epidemic, I mean, is the narrative. The history of any epidemic disease is a social palimpsest. At the core is a disease, of course. But there is no epidemic Ding-an-sich; the epidemic is a narrative. On it are imprinted the terms of the culture.

A disease crisis inevitably brings disquieting thoughts of mortality. The epidemic reveals the culture’s contemplation of death. The Todtentanz, or Danse Macabre, became a popular motif in visual art during the disastrous European plague epidemic of the 1350s. In the dance, Death, fickle and treacherous, appears as a great leveler of the social classes. Death’s indiscriminateness is a theme in Micco Spadaro’s painting The Piazza Mercatello During the Plague of 1656 and, to my eye, in Nicolas Poussin’s Plague at Ashdod. H.F., Daniel Defoe’s narrator in A Journal of the Plague Year, is explicit about the greater capacity of the rich than of the poor to escape the epidemic, but he notes, too, the class-free equality of its direst effects. The rich and the poor, he tells us, go together into the plague pit. Sudden mortality disrupts the expected cycles of family life, another trope in the narrative. Some British engravings of the plague of 1665–66 feature an infant trying to suckle at its mother’s lifeless breast. The theme reappears in our day, only slightly less heartrendingly, in the discussions about the many children orphaned by AIDS.

In contrast to those affective accounts are the statistical narratives of epidemic deaths. Sometimes, it seems, we turn to statistics as a kind of collective sublimation, a reworking of mortal fear into numbers. The Bills of Mortality, conceived in Milan in 1452 while plague was a frequent visitor to that city, were adopted widely thereafter. The City of London had them compiled and published weekly beginning before 1620; Samuel Pepys reads them unfailingly as the Great Plague advances through London in the spring and summer of 1665, watching for signs that the epidemic might be beginning to wane. My depiction of AIDS in New York, above, began with statistics; to an epidemiologist, numbers are the start of a story.

Society’s objects of horror or revulsion, its taboos and its zones of privilege, figure in the story, too. The cholera outbreaks of the mid-19th century were famously attributed to excessive alcohol use, those allegations often implicitly, or not so implicitly, directed at the Irish poor. Late-19th-century American commentary on tuberculosis, whose causative germ had then only recently been identified, focused on filth, and dust in particular; as Nancy Tomes describes in The Gospel of Germs, the era’s obsession with cleaning was rationalized as a preventive against the great disease threats of the day. The immigrant as disease carrier is a common figure in the epidemic narrative: Randy Shilts was playing on a long-popular concept when he created Patient Zero in And the Band Played On, although in the more traditional version the infectious immigrant, unlike Shilts’s flight attendant, eats funny food and doesn’t speak the language.

What do we mean, then, when we call something an epidemic? A plague? To say that AIDS is a plague, as some people do, implies different meanings. It seems devastating. It seems indomitable. It is the fault of someone else: we use the word plague in order to blame our troubles on others. And all uses of plague allude to the great epidemics of Europe, particularly the catastrophic outbreak of Black Death in the 14th century.

To the epidemiologist of today, plague is a zoonosis, that is, an animal disease, usually affecting wild rodents, especially rats. The disease-causing bacterium is spread by fleas, hopping rat to rat as the outbreak expands through the rodent ecosystem, sometimes haphazardly biting and infecting humans: plague in a human being is fallout. When plague entered Western Europe from Central Asia in 1347, calling at Constantinople and then Messina, it seems to have communicated itself by shipboard rats and their fleas until, in the crowded cities of late-medieval Europe, the human and rat environments blended.

To the epidemiologically minded European of 1347, plague represented a sign of divine displeasure. Theories of the epidemic’s origin abounded, but controversy centered on the role of unbelievers in inviting divine wrath. Jews were particularly suspect. In cities and towns throughout Alsace, the Rhineland, eastern France, and Switzerland, Jews were slaughtered in great numbers throughout 1348 and 1349. Many of those who were not killed were expelled (most migrated eastward, swelling the Jewish population of Poland, a demographic shift that remained evident for six centuries, until the 1940s). Clement VI issued one Papal Bull in 1348, prohibiting killing and forcible conversion of Jews, and another later that year stating that Christians who attributed plague to the Jews had been seduced by the Devil. Noting that Jews were themselves affected by plague, Clement held the plague to be the result of a “mysterious decree of God,” not Jewish perfidy.

But papal mandates were not effective everywhere, and Jews died, often at the hands of minor prelates or feckless officials who whipped up the mob. If the avowed purpose of those pogroms was to extirpate unbelief and so end the plague, the more central motive was, it seems, mercenary: when Jews were killed or expelled, their property was taken over and their belongings expropriated. What happened then was a stark expression of 14th-century Europe’s central anxieties, its preoccupations with both faith and emerging forms of property: civic violence in the guise of disease control.

Then, the nickname: Whereas the word plague entered English early, appearing in Wycliffe’s Bible in 1382, Black Death seems to have been of later coinage. It appeared well after plague outbreaks had subsided from northern and western Europe, in the early 18th century. A Danish-language history of Iceland, published in 1758, was the first to use the term Black Death; there, it denoted the pestilential disaster that had put an end to the golden age of Icelandic literature. Black Death seems to have been printed in English first in a historical work by Mrs. Markham (Elizabeth Penrose) in 1823; it then appeared in an English translation of Hecker’s German medical text in 1833. Although some historians speculate that the adjective black merely referred to the patchy discoloration of a plague victim’s skin produced by hemorrhaging in the subcutaneous tissue, the history of the name suggests that it was meant to capture the calamitous nature of the event. After all, the word black often connotes evil or nefariousness (e.g., blackguard, blackmail, black magic, black mass) and untouchability. For light-complected Europeans, an accusation of blackness might have been just enough aspersion to connote that the epidemic was the worst disaster ever to befall their forebears.

The point here is that, although the word plague is used to describe AIDS, and although some particulars of the epidemic narratives have changed (most of us no longer turn to ecclesiastical powers for authoritative claims about epidemic origin, for instance), the epidemic remains an account of disparate action and belief, and a complex one.

Modern science might seem to scotch all that, defining infectious disease as a simple matter of organism and host, invasion and response. But science is, in the big epidemic picture, just one of many sources of narrative. The molecular virology of the causative agent (HIV, its subtypes and strains), the immunologic phenomena (involvement of CD4 cells, macrophages, etc.), the epidemiologic statements about the probability of contracting the infection, and the prognoses offered by “evidence-based medicine” are themselves driven by other, quite unscientific concerns. We investigate the molecular genetics of disease because some people who are important in our society suffer from it; we recognize social determinants because we can identify a common symptom in those people; we look for signs of immune response because we believe that disease is a biologic entity, has a cause, can be investigated, and, somehow, handled.

In truth, there is no clearly demarcated biological disease divorced from the social narrative of the epidemic and, frankly, there is no science unless someone with money and political clout decides that there is an identifiable disease and that the social costs of doing nothing are greater than the price of research and intervention. No matter how sophisticated our molecular virology is (and it is, today), the virus is only part of the story of the epidemic.

Even in our scientific era, the names we give to epidemics reflect some of the concerns of the day: polio, the “summer plague,” caused public pools in New York to be closed, lest middle-class children share water with the Italian immigrant kids who were (erroneously) suspected of spreading the virus; syphilis, a “venereal” disease, was attributed to loose women at a time when young, unmarried women were leaving the home to enter the workforce; tuberculosis, the “white plague,” was ennobled by its associations with artistic ability and creative spirit, the adjective white at once distinguishing TB from the fearsome Black Death and reminding people of the fourth of the apocalyptic horses, the pale one, Death.

Naming the then-still-new disease acquired immune deficiency syndrome was a coup: the words sounded important but vague, with syndrome denoting a medical mystery, as it usually does; yet it had a conveniently innocent-sounding acronym, AIDS. The new name first appeared in a Centers for Disease Control publication in September 1982. I wonder, still, if what was appealing about the name was how the long and confusing moniker collapsed so neatly into a word that sounds benign. I wonder, that is, if the name was a way for a befuddled and overwhelmed federal agency to say “not to worry.”

But worry we did, increasingly. Eventually the name came to represent the narrative. Conjoining the abbreviated name of the virus that is the necessary cause of the disease and the acronymic title of the affliction itself, AIDS officials introduced a new name, HIV/AIDS. To my knowledge, its first public use was at the International AIDS Conference in Stockholm in 1988; the new term appeared in three New York Times articles that summer and quickly became de rigueur. By 1994 it was in use in government publications, by 1995 in the titles of medical books.

HIV/AIDS elides the old distinction between cause and effect. The new, joint-tenancy name says that what we have here is more than either just infection with a virus or just a clinical condition. The observable face of the thing (the disease, AIDS) merges with the biological process underlying the phenomenon (infection with the causative virus, HIV). This elision is full of implications for how we tell ourselves to think about the epidemic. The term HIV/AIDS signals that the disease brings with it a unique form of stigma, a special kind of suffering. Implicitly, we would fail to recognize the unprecedented pain of this disease were we to call it simply “HIV disease” after the virus, or “acquired immune deficiency.” There is something apocalyptic about it: if calling an infection an infection is not revealing enough about its true nature, if describing a disease by its pathological signs will not do justice to the deep meaning of the condition, then there must be mysteries that we who are accustomed to empirical judgments cannot know. That it was scientists who rushed away from empiricism and toward HIV/AIDS reminds us that the agreed-upon narrative shapes the very investigation of the world of illness and health.

Then, either satisfyingly or confusingly, depending on how you look at it, there’s the slash—HIV/AIDS is a stylish designation, ready-to-go for a 24/7 culture. The slash turns the disease into a commodity, a handle by which we can grab a package of information. The product is the information; the information is a specific message. By camouflaging the distinction between infection with HIV and the illness AIDS, the slash makes infection itself the illness.

Since HIV infection has become synonymous with the illness, as the slash says, it is incumbent on us all to take actions to prevent transmitting the virus. In these times, those actions are prescribed, formulized, and themselves packaged in easy-to-remember short phrases whose launching needs no thought and whose landing on the ear is somewhere between hortative and imperative: “Get tested.” “Shoot safe.” “Be safe!”

One implication of that packaging might be that each of us must accept public responsibility for our private actions: it’s up to you whether we will all have to face peril. I wonder if the subtlest but most telling point of the slash, the packets of HIV/AIDS info, and the distillation of human behavior into two supposedly distinct phases, “safe” and “unsafe,” is meant to resolve what has always been a contentious matter in the intersection of disease and social life: the vexed clash of private behavior and public consequence.

Perhaps, then, AIDS marks the transition between the modern epidemic and the postmodern one. It is likely to be the last epidemic to wear modernism’s trappings—the grand narrative, the claim to the future—as did the ideologies of the last century. To some, AIDS can teach lessons, much as, in recent modern times, the Holocaust, Stalinism, colonialism, or Watergate has taught us lessons. But AIDS also embraces—indeed, expects us to have—a capacity to act in a new way. Instead of filling the pest houses or cordoning off cities, as in epidemics past, we should know the risks and modify our own behavior. Perhaps, too, we should redirect desire. If there is a postmodernism to AIDS, it is that the risk to society is now supposed to be in the control of the individual.

Individuals have been urged to take responsibility in many epidemic crises before. Indeed, the history of public health in America is a history of exhortation to individuals to lift themselves out of unhealthy (or immoral) habits. In August 1849, President Zachary Taylor declared a national fast day for citizens to pray for relief from cholera. Temperance and cleanliness were urged as preventives against tuberculosis, diphtheria, and a host of other communicable conditions in the 19th and early-20th centuries (the remnants of those campaigns remain today in ordinances passed in the early 1900s against spitting on the streets; these laws were attempts to stop the spread of TB by controlling expectoration).

The difference between AIDS and previous epidemics is that, with them, the locus of danger was presumed to lie outside the individual. Like the carrying of flowers (the “pocketful of posies”) to ward off allegedly plague-bearing vapors in 17th-century London, hygiene and temperate habits were meant to keep out the attacking illness. With the reframing of AIDS risk around personal behavior, the source of the problem came to lie within each of us. Disease, or at least disease risk, became a matter of personal resolve.

The language reflects this radical shift toward individual responsibility for disease. We are supposed to offer AIDS messages (Be safe!) to our children, not messages about AIDS. We should not contract AIDS fatigue, that is, we must not relax our vigilance about risk. A recent campaign by the American Foundation for AIDS Research (amFAR), sponsored by Kenneth Cole, claims “We All Have AIDS—If One of Us Does.” We know that Richard Gere, Archbishop Tutu, Tom Hanks, and Nelson Mandela, who appear in the ad, don’t have AIDS—but we are meant to understand that they might, we might, anyone might, and therefore we are all responsible for doing something (What? Buying Cole’s clothing and shoes? Donating to amFAR?), or more people will die. This is both true and deeply misleading (hardly shocking—it’s an advertisement, after all). We are responsible to ourselves, of course, and might wish to adjust our sexual behavior to avoid contracting dangerous viruses. But not just anyone might have AIDS. The disease does discriminate. Globally, it is the poor who bear its burden, because (as Defoe knew), the poor cannot control the circumstances of their lives; the affluent can.

Is it also true that our decisions about how to act in private are of grave consequence to society? It is rare for an outbreak of HIV to depend on the sexual behavior of one individual. Typically, outbreaks of HIV infection occur in three situations: when large sexual-contact networks of several infectious individuals overlap; when an HIV-infected drug user joins a network of users sharing injecting equipment, thereby contaminating multiple syringes and turning them into vectors capable of transmitting HIV to many others; and when many people are having sex with very many others, as in the gay bathhouse scene of the 1970s and early 1980s. Context matters.

The AIDS narrative that emphasizes behavior and not context is not the work of any one person or organization. The AIDS narrative follows a central thread of society’s contemporary account of its own health. The contemporary epidemic story focuses on the role of the individual as an agent capable of harming all of us.

In public health, the era of the new narrative had already begun by the middle of the 20th century. While reformers and religious charities had talked about individual accountability for illness throughout American history, government did not take that up as a public-health policy until the late 1940s. Led by physician-epidemiologists concerned about the new prominence of heart disease and cancer as causes of death, health officials’ interest after World War II shifted away from the social crisis of epidemic contagion. Polio vaccine, licensed in 1955, culminated the last great national effort to control any infectious illness of social import in the United States. The gaze of America’s health agencies in the postwar era fixed on elements of behavior, such as smoking and diet.

Epidemiologic studies begun in the middle of the last century led to the demonstration that cigarette smoking is strongly associated, statistically, with the development of lung cancer. The Framingham, Massachusetts, study of heart disease, launched around the same time, sought to link personal attributes, including blood pressure and diet, to the probability of cardiovascular death. What epidemiologists began to do is define behavior in terms of the chances of illness; an epidemiologist calls that risk.

If we seek to know why nonevents having to do with AIDS—that single anomalous case in New York in 2005, for instance—can arouse emotional and sometimes effusive responses, we will have to ask how we have told the stories of AIDS risk.

In America, alleged misbehavior is at the heart of the narrative of vulnerability and risk, and the root of misbehavior is often sex. Public discussion of AIDS, in the early 1980s, began with unsubtle attacks on homosexuality: to call a disease the “gay plague,” as Philadelphia’s Daily News, the Toronto Star, London’s Sun, and a number of other newspapers did in 1982, or the “homosexual plague,” as Newsweek put it, could only imply that people who were recognizable by their sexual proclivities had become the victims of their own devices (which were suspect to begin with).

The White House at the time said little one way or the other. Research funding was a long time in coming and, when it did, it was heavily weighted toward benchtop science rather than community or economic initiatives. The swine-flu fiasco of 1976–77 had raised consciousness about the dangers of too-active federal involvement in forestalling epidemics that might turn out to be chimeras. And, by the second Reagan inauguration in 1985, AIDS had established itself as a disease of the distinctly unpopular.

Homosexuality was unpopular long before AIDS, but the post-Stonewall efflorescence of male-male sexuality embodied in a kind of multiplicative sexual adventuring in the bars and bathhouses of San Francisco and New York courted more than moral disapprobation: beyond violating biblical injunctions, it also stormed a bulwark of modern American conservatism; it expropriated sexual license from the socially privileged. If the right to have sex with many different people and not be bothered about it by the media (or moralists) is a perquisite of aristocracy, the gay movement was a palace revolution.

Politically, therefore, AIDS was useful to the protectors of the status quo. The narrative of perilous modernity, from which the Right gains so much strength, resounded loudly when AIDS appeared to decimate the very groups that seemed to refuse to buy into the old norms. Conservative politician Patrick Buchanan said of AIDS, in 1983, that “the sexual revolution has begun to devour its children” and inveighed against the “homosexuals . . . [who] have declared war against nature.” The anathema was disconcerting but, given the tenor of public dialogue in the Reagan years, hardly surprising.

But conservatives have been only one voice in what has always been a complex and multicharacter plot. Progressives, the very people whose sentiments most favored sexual openness, came to call for sexual restraint. We public health professionals, eager to have something to offer to the millions of people who appeared to be vulnerable to AIDS, and anxious to distance ourselves from conservatives who read in the AIDS story a moral of the evil of sexual license, discovered the condom.

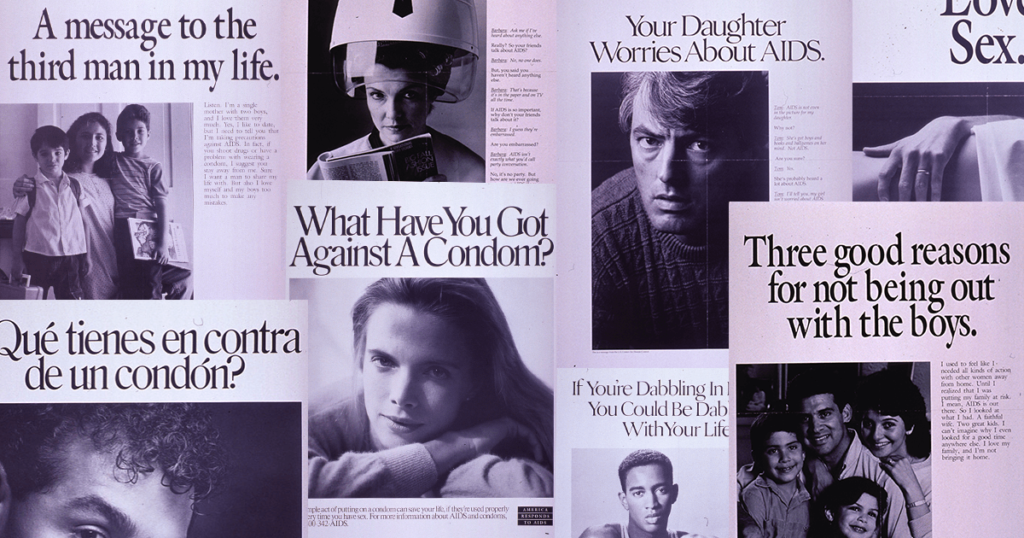

Long before AIDS—before even the identification of viruses as disease agents—Nietzsche wrote that when a “chance event” brings harm to a community (mentioning storms, crop failure, and plague), “the suspicion is aroused that custom has been offended in some way or that new practices have to be devised to propitiate a new demonic power and caprice.” As Nietzsche had predicted, when AIDS reached the scale of community crisis, people seized on new practices. There was William F. Buckley’s recommendation to tattoo the infected. There were all sorts of snake-oil treatments. And there was the condom. Progressive public-health advocates rescued the condom from its role as prop in a bunch of bad jokes and made it the standard of our own crusade.

The condom campaign was especially appealing because it offered a middle road. It was a way between the two sides battling in the early 1980s: those who urged homosexual men to stop having so much sex with so many other men—Dr. Joseph Sonnabend’s cautionary article in the New York Native in September 1982 was called “Promiscuity Is Bad for Your Health”—and those who argued that frequent-partner-change sex was emblematic of gay identity and could not be sacrificed.

With the public-health crusade gathering force, “safe sex” became a kind of substitute prayer in the AIDS storm, the condom a kind of icon. Perhaps it was natural that gay men, ill-disposed toward those churches that explicitly made them unwelcome in those days, led the way in elevating certain practices to a kind of sanctity. Not long ago, a student recounted movingly to me that he, as a gay Catholic entering adulthood in New York just as AIDS was making its debut, had found that educating others to avoid AIDS through safe sex was a substitute for the love he had previously experienced in the Church but, by the 1980s, no longer could feel. No doubt, the Church of Risk Reduction—safe sex, condoms, freedom through restraint—offered relief from the horrific devastation wrought by the new disease with a new unity in faith, the collective belief that the new practices would ward off further suffering.

But when we progressives in the public-health field embraced the safe sex campaign, we also implicitly endorsed the message of individual accountability that the health narrative of the day proffered. Resisting moralism about homosexuality and promiscuity, we instead promoted a new dogma about individual responsibility: Safe sex. Personal risk reduction.

Condoms do prevent the transmission of HIV if used properly, and such use probably helped stem the advance of HIV in the United States. But we need not overestimate how much it helped, population-wide. Condoms probably have had some impact on HIV transmission among young gay men, but reduction in the rate of partner change was much more important. Condoms only slightly slowed the advance of HIV in other populations in the United States. Very few adults, even in the AIDS era, use a condom all or even most of the time (for the expected reason: it doesn’t feel good, and the reason most people have sex is that it feels good). Occasional use of condoms, even by the majority of a population, will result in only a minimal reduction in the rate of new HIV infections when, as is true for a large majority of Americans, very few sexual encounters are with people who have infectious HIV.

While condoms are well advised to a man, woman, or teen about to have sex with someone who might carry dangerous germs, or to a person who is infected and wants to make sure that his partner is safe, the public campaign about condoms has probably had more of an impact on how we talk about AIDS than on the overall spread of the virus. And safe-sex advocacy seems to have budged the moralists’ account of the AIDS epidemic only slightly.

The moralists’ account, the offense to custom, the new practices—all, I mean to say, are our account. The similarities between the public debates about AIDS today and those of the early years of AIDS can be shocking, given how much more open America has become about sex in the meantime. The term gay plague is no longer acceptable (Jerry Thacker, appointed by George W. Bush to the Presidential Advisory Council on HIV and AIDS, was forced to resign in 2003 after reporters noticed that his Web site used the term gay plague for AIDS). Homosexual couples want to marry. In Lawrence v. Texas (2003), the Supreme Court essentially overturned its own 1986 ruling in Bowers v. Hardwick, thus rendering state antisodomy laws unconstitutional. Plague fantasies about hordes of priapic gay men rampantly shooting HIV into each other are antique and are virtually never aired anymore. Yet, when the New York City AIDS case was publicized last year, the accompanying indictments were not offered purely by homophobes, the clergy, or even those social conservatives whose condemnations had been part of the AIDS discussion from the outset. Gay leaders, sex-advice columnists, and public-health officials cautioned restraint. The mayor, as I mentioned, talked about sin. “Lives are being put at risk by reckless behavior,” an editor of an AIDS-focused magazine said. The author of a history of gay New York told The New York Times that “gay men do not have the right to spread a debilitating and often fatal disease.” In 15 states, acts that are already criminal, such as assaulting a law officer or patronizing a prostitute, carry an additional sentence if the perpetrator knows he or she has HIV. Sexual accountability and civic responsibility seem to blur together. Misbehavior might not be a sin, but it can be a crime.

The male who is sexual or sexualized in ways that do not fit the standard model is in a discomfited position today. Americans seem to tolerate fun-loving but reckless playboys—movie stars, rock musicians, ballplayers—as long as they are bourgeois, heterosexual, and white. But we are profoundly unsettled when we learn of sexual high jinks by a gay man, a dark-skinned man, or a poor man. AIDS, we have seen, can turn that discomfort into accusation. The uproar that arose from the case of the much-publicized—and, not incidentally, African-American—“sexual predator” Nushawn Williams in 1997, accused of knowingly spreading HIV and prosecuted for it, ignored the larger truth that many people who have HIV continue to have intercourse, and the smaller truth that other HIV-infected men had pretty clearly done so without protection and with more knowledge of the danger. The Williams story followed a narrative thread of sexual misbehavior that is sadly customary in America: young black man, sex with many white women. Infection was invoked as the main instance of offense. The headlines followed.

Perhaps it should be no surprise that the New York furor of 2005 had as its subject a man who had quite a lot of sex with other men and used drugs. We were told repeatedly that he had sex even after he knew he had HIV. The story about this man’s sexual activity was reiterated in every news report of the case, hyped with terms like “drug-fueled sex” and “multiple, anonymous partners.” Was the public meant to be alerted, as the health commissioner intended, or alarmed (or revolted)? Sometimes the story of the case, as retold by the media, seemed to be not about AIDS per se but rather about satyriasis, profligacy, disregard for the standards of sexual decorum. If it was a parable about depravity, it was perfectly cast: the sexual mischief attributed to the man in question rendered him—or, at least, the character that was implied to newspaper readers and viewers of the 10 o’clock news—both fascinating and dreadful at once and lent the story more than a modicum of sexual titillation. There would have been no public drama otherwise.

Without AIDS, how would we tell ourselves these stories about ourselves? Where would we settle our unease about sex (and drugs), our conflicts over civic responsibility and self-indulgence? And, maybe most important of all, how would we deal with our sometimes alienating modern world, our minds swarming with unidentifiable dreads and misgivings? How, that is, would we give voice to our anxieties about contemporary society unless we could wrap them up and externalize them in risk? AIDS offers us a page on which we can write the story. We can convince ourselves that we are making sense of risk. We are correctly interpreting the science. We are making decisions rationally. The mysterious and unpredictable world, with its germs and its illogic and sudden death, seems to make sense in the AIDS narrative.

That AIDS is as far as ever from ordinariness does not mean that Sontag was wrong. Nor have we failed with AIDS, although our leaders surely do lapse from time to time, in both learning their lessons from history and serving the public. No, AIDS cannot be ordinary because human societies always account for disease and death as a story. We need epidemics in order to work out the narrative of who we are.

There will be other incidents, more scares about “new, virulent strains,” and further pronouncements about sex and sin. It is part of the story of AIDS, our story of ourselves, the American version of America. One day, probably a long time from now, there will be a new epidemic, a new set of metaphors to illustrate the tale of what we find most remarkable about us. Perhaps avian flu, with its self-evident opportunities for putative augury and its pushing of our Hitchcockian buttons about the treachery of birds, will be that epidemic—although I think that it will not; it will take a virus both better adapted to humans and harder to prevent with a vaccine. That next great epidemic, whatever it is, once it comes—when it comes—will change the narrative of AIDS. We will no longer need to write our myths and moral hopes into the AIDS story. Then, but only then, AIDS will indeed become ordinary.